Happy New Year!

It’s mid January, and I am happy to report that so far, I’m doing AWESOME with my resolutions. One of which is to get better at meal planning and prepping for my (ahem!) difficult family.

My daughter is vegetarian on her way to becoming vegan. My husband is trying to lose a few holiday pounds and is doing keto at the moment. As for myself, I tend to be anemic and am always looking for recipes that are high in iron and vitamin C.

Finding a variety of meals that satisfy everyone’s preferences isn’t easy, and I thought this would be a good challenge for ChatGPT. I spent the weekend figuring the right prompts, and was pretty excited about the results.

Then, of course, my mind went immediately to: This could be an (APEX) app!

It certainly wouldn’t be the first time I used Oracle APEX to help me solve a challenge in my personal life. I’ve used it to run a fitness challenge, get my kids reading, generate fun math drills for my daughter, and much more.

But read on to see how I used Oracle APEX to create a simple GPT3 app to help me feed my family.

And if you want to try it out for yourself, click here! (demo/demo2023)

1. Get your OpenAI API key

This may or may not be straightforward! ChatGPT has been ‘at capacity’ from time to time lately. My husband tried to sign up a few days ago and got the following:

As far as we both can tell, the notification doesn’t work. The email never came, but he has since been able to sign up. Be patient, my friend. And don’t wait for the email.

Get started at the OpenAI website here. Once you’ve signed up, you’ll want to create a new secret key under the USER settings of your account.

2. Understand the OpenAI API and its response

Once you’ve signed up for OpenAI, the first place you’ll want to go is the OpenAI Playground. This is where you can try out different calls to the OpenAI completions endpoint: https://api.openai.com/v1/completions

You’ll immediately see many of the parameters you can use (model, temperature, max-length, etc). Take your time trying out different prompts and playing with the settings. It’ll give you a good sense of how they work and how you might be able to leverage them in your application.

The following parameters are required when making a POST request to the OpenAI API completions endpoint:

prompt: This parameter is the input text for the GPT-3 model. This is the text that the model will use to generate a response.model: This parameter specifies the GPT-3 model that you want to use.

All other parameters are optional.

It’s important to point out that if you don’t set the completions parameter the API will return 1 completion by default, and if you don’t set the max_tokens parameter the API will return 2048 tokens (maximum number of words) by default.

Tips for the best prompt for your OpenAI call

After playing with ChatGPT and the OpenAI playground for a few days now, all I can say is get specific. We’re used to keyword searches in Google, but this is a completely different ballgame. The more information you give the AI, the better your response will be. I’ve watched a few videos and read a few posts about effective prompts.

Here’s a post that gives you an idea of the sorts of things you might use as your prompt. Some might surprise you, others will certainly get you thinking about all the possibilities..

As a reminder, OpenAI has been trained on data up to 2021. It has no knowledge of events after 2021.

I did ask the AI for some tips about regarding prompts:

- Be clear and specific: When creating your prompt, make sure to be clear and specific about what you want the API to generate. The more specific your prompt is, the more accurate and useful the API’s response will be.

- Use context: If your prompt is part of a larger conversation or context, make sure to include that context in the prompt. This will help the API understand the intent of your request and generate a more accurate response.

- Be concise: Keep your prompts short and to the point. Longer prompts can be more difficult for the API to understand, and may result in less accurate responses.

- Use examples: If you’re unsure how to phrase your prompt, try using examples of the kind of response you’re looking for. This will help the API understand the intent of your request and generate a more accurate response.

- Avoid asking too many questions: Asking too many questions in one prompt can be confusing for the API and can result in less accurate responses. Try to focus on one specific question or task in each prompt.

- Use the right model: Different models are better suited for different tasks. Make sure to choose the right model for your task and use the latest version if possible.

- Test different variations: Try testing different variations of your prompt to see what results in the most accurate response. Keep in mind that the API can generate different responses based on the same prompt, due to its randomness feature.

Which OpenAI model should you choose?

Which model to use depends on the specific use case you have in mind. Here’s a brief overview of the main models currently available:

text-davinci-003: This is the latest and most powerful GPT-3 model, it can perform a wide range of natural language tasks, including language translation, summarization, question answering, and more. It’s the best option when you want to generate human-like responses, or you want to perform a specific task and you want to use the best model available.text-davinci-002: This model is the previous version oftext-davinci-003, and it’s also a very powerful model that can perform a wide range of natural language tasks. It’s a good option when you want to use a stable version of the model, or when you want to use a more cost-effective version of the model.text-curie-001: This model is optimized for answering questions, and it’s a good option when you want to use the API to answer questions.text-babbage-001: This model is optimized for coding, and it’s a good option when you want to use the API to generate code or write comments on code.text-bart-001: This model is optimized for text summarization, and it’s a good option when you want to use the API to summarize long documents.text-code-001: This model is optimized for code generation, and it’s a good option when you want to use the API to generate code for different languages.

If you’re not sure which model to use, you can start with text-davinci-003 and then test other models as needed.

So far, I have only played with text-davinci-003.

The OpenAI JSON Response

The response of a call to the OpenAI completions endpoint is in JSON format. Here’s a sample response from a call I made asking for meal plan recommendations:

{

"id": "cmpl-6YjiDBVI97602ZVr5qw3XAXqmRLpd",

"object": "text_completion",

"created": 1673736889,

"model": "text-davinci-003",

"choices": [

{

"text": "\n\nDay 1\n\nBreakfast: Spinach and Mushroom Omelette with Avocado\n\nLunch: Grilled Chicken Salad with Olive Oil and Lemon Juice\n\nDinner: Zucchini Noodles with Ground Beef and Marinara Sauce\n\nDay 2\n\nBreakfast: Coconut Flour Pancakes with Sliced Apples\n\nLunch: Baked Salmon with Broccoli and Asparagus\n\nDinner: Cauliflower Rice with Ground Turkey and Peppers\n\nDay 3\n\nBreakfast: Greek Yogurt with Walnuts and Berries\n\nLunch: Cobb Salad with Hard Boiled Egg and Avocado\n\nDinner: Kale and Mushroom Frittata with Roasted Potatoes",

"index": 0,

"logprobs": null,

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 34,

"completion_tokens": 152,

"total_tokens": 186

}

}

I can immediately see that the piece I will likely want to use in my app is the data from the “text” field. Because my call to the endpoint included a single completion, I got one ‘choice’ element only. Had I asked for more completions, there would have been an array of choices with their corresponding text.

3. PL/SQL package to make the OpenAI REST API call

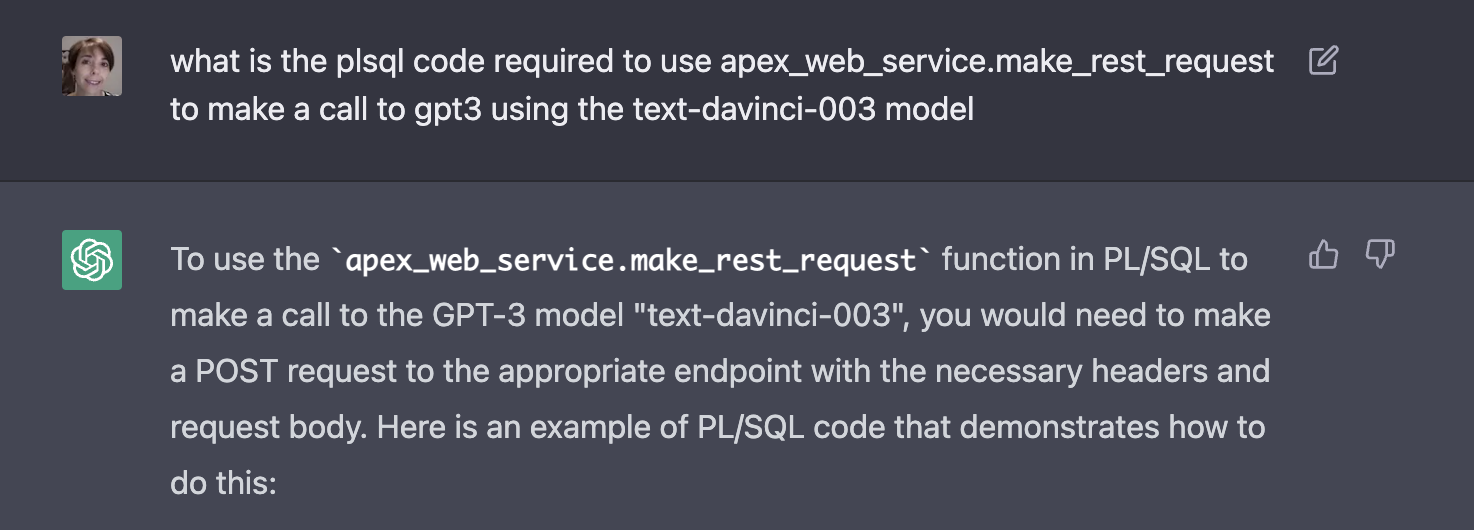

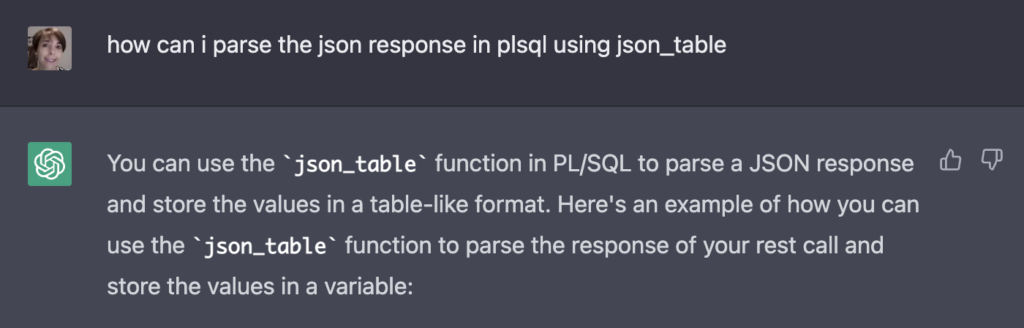

I’m not gonna lie. It’s Sunday, and I am trying to move quickly. I was also curious, so I gave ChatGPT the following prompt:

And ChatGPT gave me the following code:

declare

l_request_text varchar2(4000) := '{"prompt": "What is GPT-3?", "model": "text-davinci-003"}';

l_response clob;

begin

l_response := apex_web_service.make_rest_request(

p_url => 'https://api.openai.com/v1/engines/davinci/completions',

p_http_method => 'POST',

p_body => l_request_text,

p_headers => 'Content-Type: application/json',

p_username => ''

);

-- Do something with the response here

dbms_output.put_line(l_response);

end;

Did I then ask for the PLSQL code to help me parse the resulting JSON using JSON_TABLE? Maybe. Maybe not.

For the curious among you, here is our conversation:

declare

l_request_text varchar2(4000) := '{"prompt": "What is GPT-3?", "model": "text-davinci-003"}';

l_response clob;

l_json_table json_table;

begin

l_response := apex_web_service.make_rest_request(

p_url => 'https://api.openai.com/v1/engines/davinci/completions',

p_http_method => 'POST',

p_body => l_request_text,

p_headers => 'Content-Type: application/json',

p_username => ''

);

-- Do something with the response here

select * into l_json_table from json_table(l_response, '$.choices[*]'

columns (

value varchar2(4000) path '$.text'

)

);

-- Now you can use the l_json_table variable as a table

for i in (select * from l_json_table)

loop

dbms_output.put_line(i.value);

end loop;

end;

The only thing I could do then was humbly say thank you..

So my code isn’t quite ready, I need to put it in a package, handle errors, and decide how exactly I am going to use it, but I am pretty darn close.

My final package included a procedure that was tweaked slightly from the OpenAI output, mainly to look at the response status code (200 ok or not).

create or replace package chatgpt_pkg is

/*

Purpose: PL/SQL package for OpenAI API

Remarks: Allows us to post requests to OpenAI API

Who Date Description

—— ———- ————————————-

MS 14.1.2023 Created package

*/

procedure get_completion (

in_parm1 in varchar2,

out_plan out clob

);

end chatgpt_pkg;

create or replace package body chatgpt_pkg is

g_gpt_api_url constant varchar2(255) := ‘https://api.openai.com/v1/completions’;

g_api_secret constant varchar2(255) := ‘YOUR API KEY HERE’;

procedure get_completion (

in_parm1 in varchar2,

out_plan out clob

)

is

l_result clob;

/* the parameters in the request could/should be variables for a more flexible call */

l_request_text varchar2(4000) := ‘{“prompt”: “‘||in_parm1||'”, “model”: “text-davinci-003″,”temperature”:0.7,”max_tokens”:2000}’;

l_json_table clob;

l_status_code number;

begin

apex_web_service.g_request_headers.delete(); — clear the header

apex_web_service.g_request_headers(1).name := ‘Content-Type’;

apex_web_service.g_request_headers(1).value := ‘application/json’;

apex_web_service.g_request_headers(2).name := ‘Authorization’;

apex_web_service.g_request_headers(2).value := ‘Bearer ‘||g_api_secret;

l_result := apex_web_service.make_rest_request(

p_url => g_gpt_api_url,

p_http_method => ‘POST’,

p_body=>l_request_text

);

— Get the status code from the response

l_status_code := apex_web_service.g_status_code;

— Raise an exception if the status code is not 200

IF l_status_code != 200 THEN

raise_application_error(-20000, ‘API request failed with status code: ‘ || l_status_code);

END IF;

for i in (select * from json_table(l_result, ‘$.choices[*]’

columns (

value varchar2(4000) path ‘$.text’

)

))

loop

out_plan:=out_plan||i.value;

end loop;

end get_completion;

end chatgpt_pkg;

4. Oracle APEX page to call OpenAI

From playing around with the AI, I’ve learned a thing or two about how to get the sort of results I am looking for. It took me a while to get there though! That is where I see one of the big values of creating applications on top of the API. People generally don’t yet know how to formulate requests or what prompts work best.

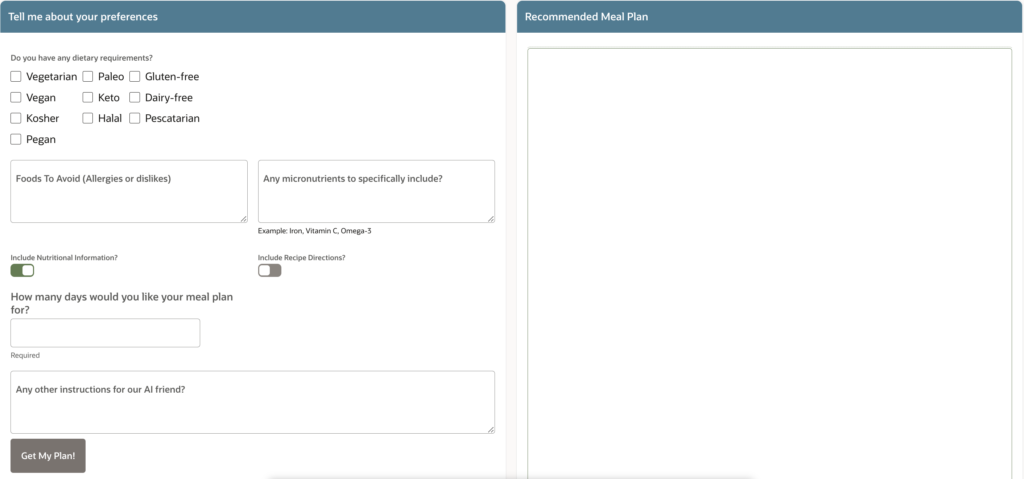

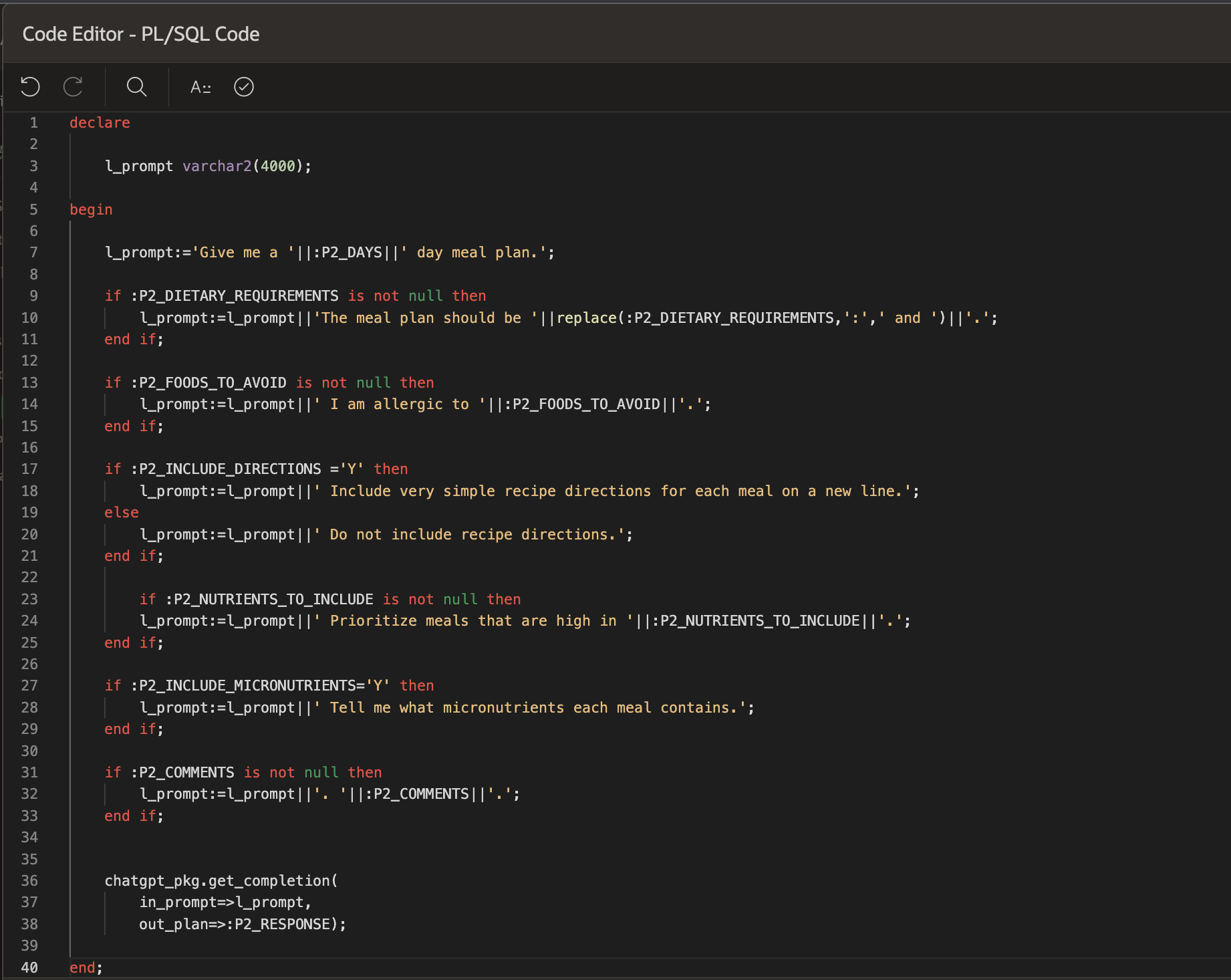

So my APEX page basically has a series of fields with which I build up the OpenAI prompt on the back end.

The big blank section on the right will return the results into :P2_RESPONSE.

The page has a single process that is called when the user clicks on the Get My Plan! button

And that’s it! My process looks at the user’s answers and builds up an appropriate prompt to feed the AI. The API then returns the response into the Meal Plan field.

I am so excited about the possibilities of this type of integration and look forward to doing much more in the near future. I’d love to hear what sort of applications you’ve built or are looking forward to building. Drop them in the comments!

But for now, I must go back to my meal prep. My son has just told me we don’t eat enough meat and wants me to enter ‘Vegetarian’ and ‘More meat’. I am afraid even GPT3 can’t help me solve this problem….

If you want to try out the app yourself, click here. (demo/demo2023)

Expert Oracle APEX Development

Our team at Insum has dozens of certified and experienced Oracle APEX Developers to help you execute your projects. Contact us today!

It looks great !! Please share the credentials for accessing your app

Username: demo

Password: demo2023

Username and password?

Sorry! demo/demo2023